Real-time Pose and Shape Reconstruction of Two Interacting Hands With a Single Depth Camera

Abstract

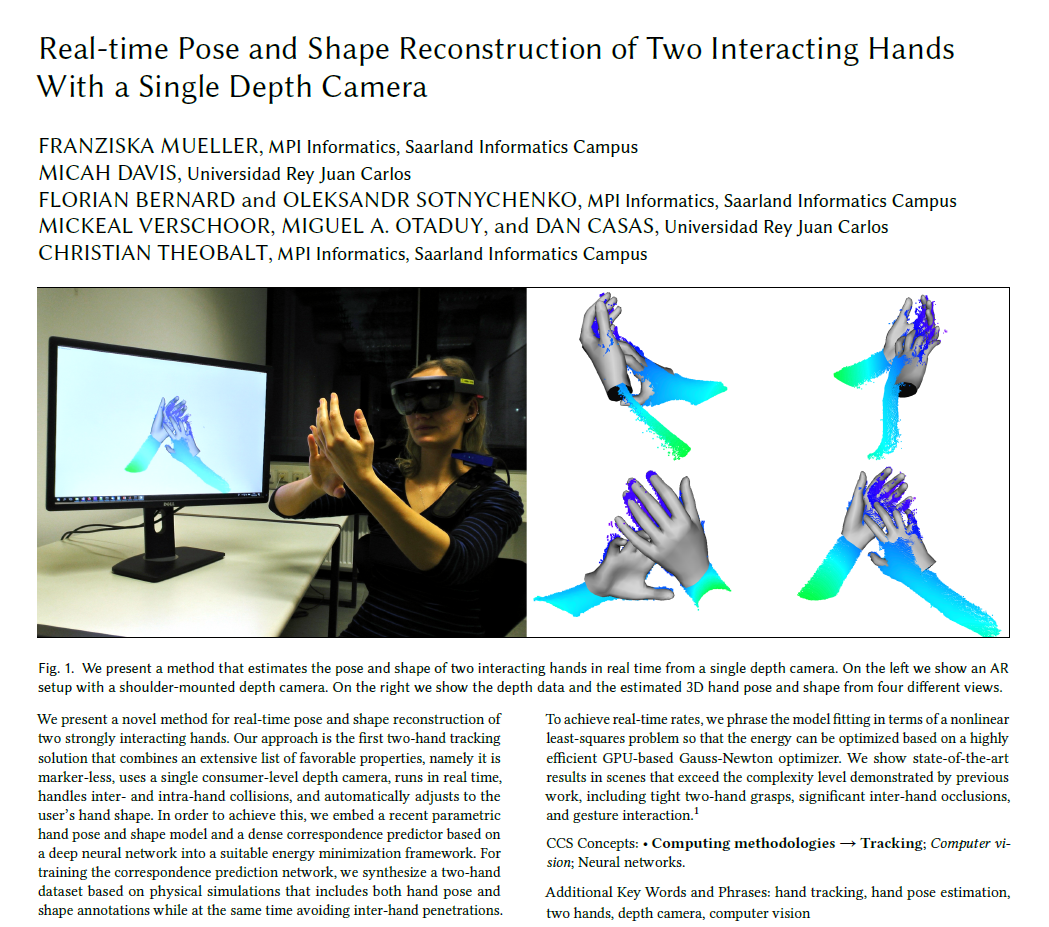

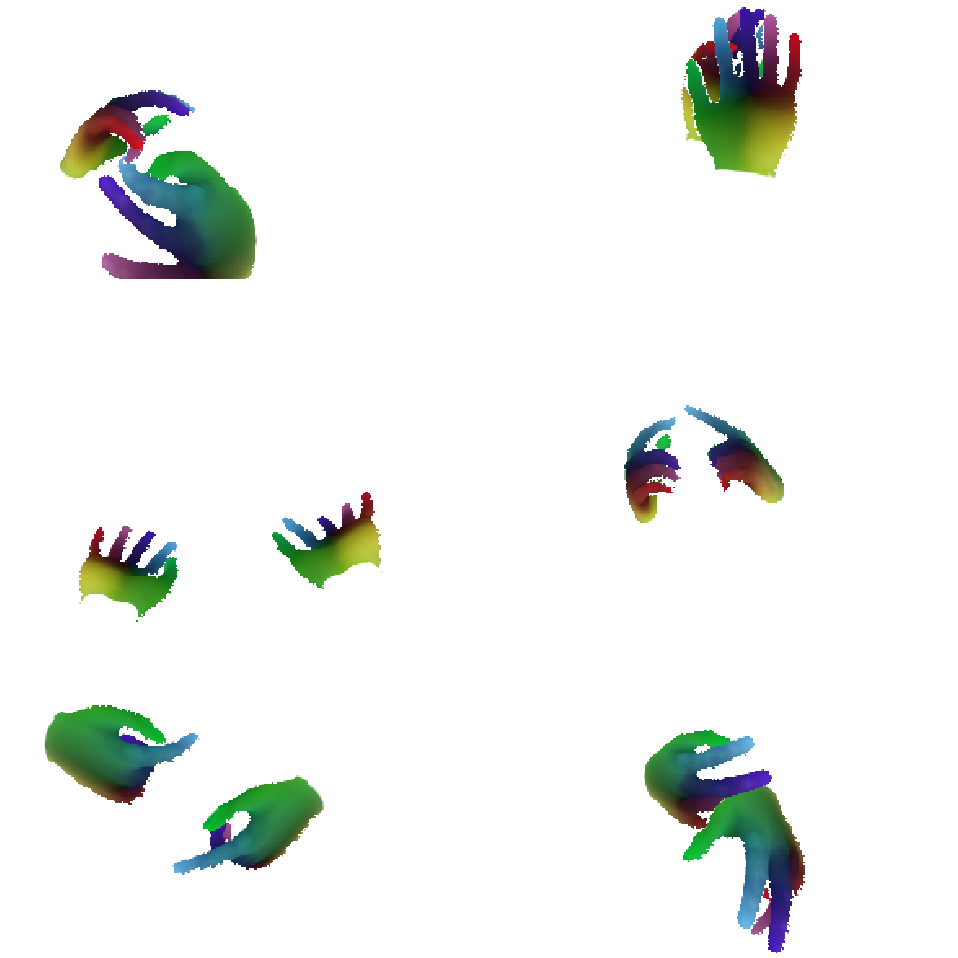

We present a novel method for real-time pose and shape reconstruction of two strongly interacting hands. Our approach is the first two-hand tracking solution that combines an extensive list of favorable properties, namely, it is marker-less, uses a single consumer-level depth camera, runs in real time, handles inter- and intra-hand collisions, and automatically adjusts to the user's hand shape. To achieve this, we embed a recent parametric hand pose and shape model and a dense correspondence predictor based on a deep neural network into a suitable energy minimization framework. For training the correspondence prediction network, we synthesize a two-hand dataset based on physical simulations that includes both hand pose and shape annotations while at the same time avoiding inter-hand penetrations. To achieve real-time rates, we phrase the model fitting as a nonlinear least-squares problem so that the energy can be optimized with a highly efficient GPU-based Gauss-Newton optimizer. We show state-of-the-art results in scenes that exceed the complexity level demonstrated by previous work, including tight two-hand grasps, significant inter-hand occlusions, and gesture interaction.
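To illustrate what "phrasing model fitting as a nonlinear least-squares problem" buys, here is a minimal CPU sketch of Gauss-Newton on a toy problem. It is not the authors' energy or solver: the real method fits a full parametric two-hand model to dense correspondences and collision terms on the GPU. The planar two-segment "finger", its segment lengths, and the function names are hypothetical. Each iteration linearizes the residuals r(θ) with the Jacobian J and solves the normal equations (JᵀJ)δ = −Jᵀr for the pose update δ.

```python
import numpy as np

# Hypothetical planar "finger" with two segments (lengths are assumptions).
L1, L2 = 1.0, 0.8

def forward_kinematics(theta):
    """Return 2D positions of the middle joint and the fingertip for angles theta."""
    t1, t12 = theta[0], theta[0] + theta[1]
    joint = np.array([L1 * np.cos(t1), L1 * np.sin(t1)])
    tip = joint + np.array([L2 * np.cos(t12), L2 * np.sin(t12)])
    return joint, tip

def residuals(theta, observed):
    """Stack model-minus-observation offsets for both points (4 residuals, 2 unknowns)."""
    joint, tip = forward_kinematics(theta)
    return np.concatenate([joint - observed[0], tip - observed[1]])

def jacobian(theta):
    """Analytic 4x2 Jacobian of the residuals with respect to the two joint angles."""
    t1, t12 = theta[0], theta[0] + theta[1]
    J = np.zeros((4, 2))
    # The middle joint depends only on the first angle.
    J[0, 0] = -L1 * np.sin(t1)
    J[1, 0] = L1 * np.cos(t1)
    # The fingertip depends on both angles.
    J[2, 0] = -L1 * np.sin(t1) - L2 * np.sin(t12)
    J[3, 0] = L1 * np.cos(t1) + L2 * np.cos(t12)
    J[2, 1] = -L2 * np.sin(t12)
    J[3, 1] = L2 * np.cos(t12)
    return J

def gauss_newton(theta0, observed, iters=20, tol=1e-12):
    """Minimize 0.5 * ||r(theta)||^2 by repeatedly solving the normal equations."""
    theta = np.asarray(theta0, dtype=float).copy()
    for _ in range(iters):
        r = residuals(theta, observed)
        J = jacobian(theta)
        delta = np.linalg.solve(J.T @ J, -J.T @ r)  # (J^T J) delta = -J^T r
        theta += delta
        if np.linalg.norm(delta) < tol:
            break
    return theta

# Usage: synthesize observations from known angles, then recover them from a rest pose.
true_theta = np.array([0.3, 0.5])
observed = forward_kinematics(true_theta)
theta = gauss_newton([0.0, 0.0], observed)
print(theta, np.linalg.norm(residuals(theta, observed)))
```

The normal-equations form is the reason least-squares phrasing suits real-time use: each step reduces to one linear solve whose structure (JᵀJ and Jᵀr) can be assembled and solved in parallel, which is what a GPU-based Gauss-Newton solver exploits at much larger scale.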

Downloads

* The original presentation was created in Keynote. The PowerPoint (pptx) version of the slides was exported from Keynote, so its quality may be degraded (e.g., animations).

Citation

@article{mueller_siggraph2019,
  title={{Real-time Pose and Shape Reconstruction of Two Interacting Hands With a Single Depth Camera}},
  author={Mueller, Franziska and Davis, Micah and Bernard, Florian and Sotnychenko, Oleksandr and Verschoor, Mickeal and Otaduy, Miguel A. and Casas, Dan and Theobalt, Christian},
  journal={ACM Transactions on Graphics (TOG)},
  volume={38},
  number={4},
  year={2019},
  publisher={ACM}
}

Acknowledgments

The authors would like to thank all participants of the live recordings.

The work was supported by the ERC Consolidator Grants 4DRepLy (770784) and TouchDesign (772738).

Dan Casas was supported by a Marie Curie Individual Fellowship (707326).

