Dexter 1

Motions

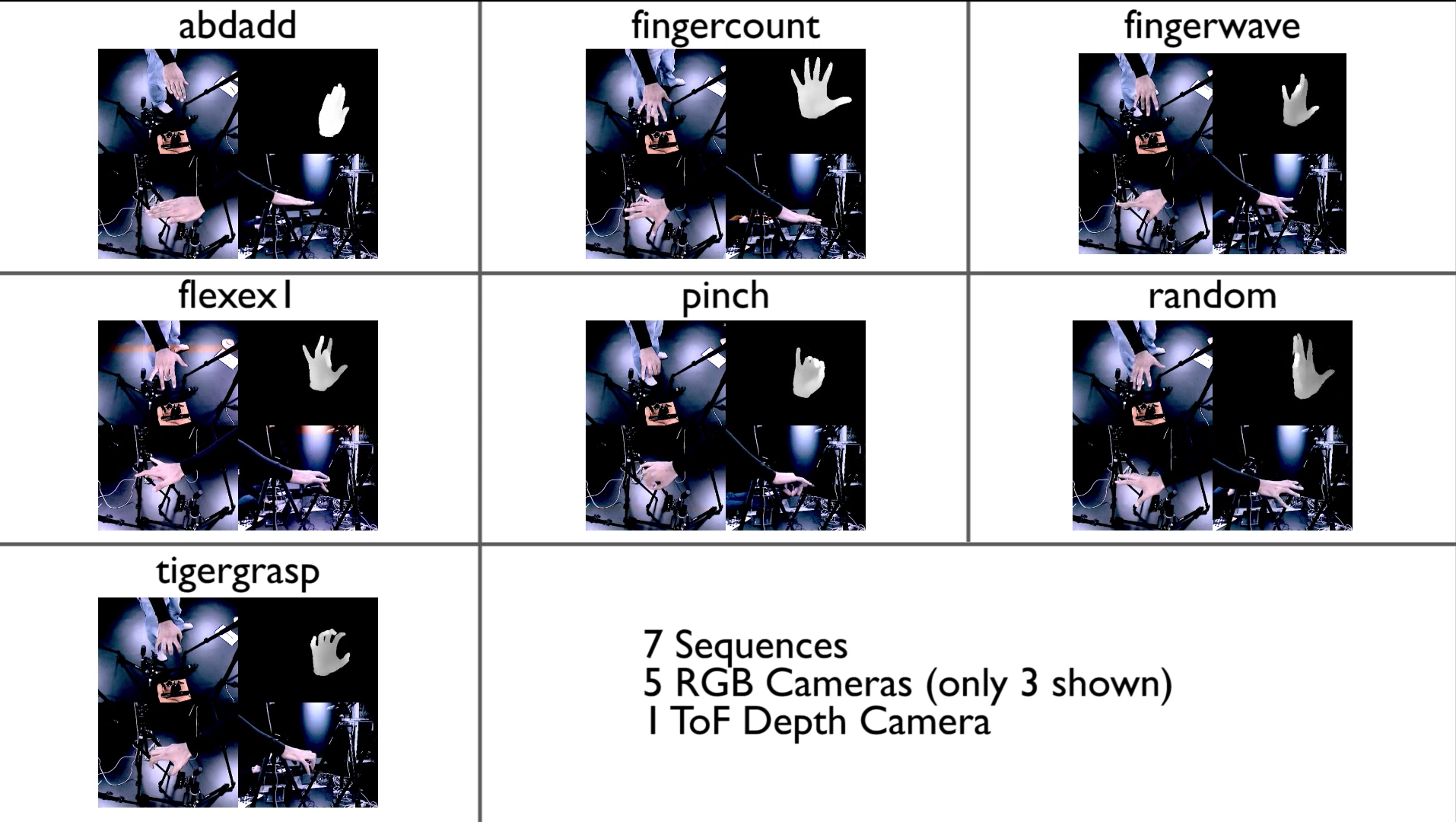

Dexter 1 consists of 7 sequences of challenging, slow and fast hand motions that covers the abduction-adduction and flexion-extension of the hand. Roughly the first 250 frames in each sequence correspond to slow motions while the remaining frames are fast motions. All sequences are with a single actor's right hand.

Data

- RGB: 5 Sony DFW-V500 RGB cameras at 25 fps

- Depth: 1 Creative Gesture Camera (Close-range ToF depth sensor) at 25 fps

- Depth: 1 Kinect structured light camera at 30 fps

- Ground Truth: Manually annotated on ToF depth data for 3D fingertip positions

Downloads

- Compressed Tarball: Single file (tar.gz, 2.9 GB), SHA-256:

da3405357efa09c126a8f8fc3d01b121adc7ba260a22b66e9c4a4e9c5bc37479

- Browse: Link

- Camera Setup: [ Picture 1 | Picture 2 ]

License

If you use this dataset in your work, you are required to cite the following paper. BibTeX, 1 KB

@inproceedings{handtracker_iccv2013,

author = {Sridhar, Srinath and Oulasvirta, Antti and Theobalt, Christian},

title = {Interactive Markerless Articulated Hand Motion Tracking using RGB and Depth Data},

booktitle = {Proceedings of the {IEEE} International Conference on Computer Vision ({ICCV)}},

url = {http://handtracker.mpi-inf.mpg.de/projects/handtracker_iccv2013/},

numpages = {8},

month = Dec,

year = {2013}

}

Evaluation

The following table lists the average fingertip error for several published algorithms. The error is computed as the average Euclidean distance of all estimated 3D fingertip locations from the 5 ground truth 3D positions. (The palm center annotation is not used in all except one method.)

| Algorithm | Average Error [mm] |

|---|---|

| Sridhar et al., ICCV 2013 | 13.1[1] 31.8[2] |

| Sridhar et al., 3DV 2014 | 24.1 |

| Franziska Mueller, 2014 (offline method) | 15.2 |

| Sridhar et al., CVPR 2015 | 19.6 |

[2] Palm center not used, all frames

If you would like to include your algorithm results here, please contact us.

Sequence Details

Please click on the image above for a larger preview of the links below for a video preview.- adbadd: Abduction-adduction of all fingers together. Please note the spelling.

- fingercount: Counting using each finger and the thumb.

- fingerwave: Waving of fingers.

- flexex1: Flexion-extension of all fingers.

- pinch: Pinching while moving around.

- random: Random motions with articulation.

- tigergrasp: Making a posture like a tiger grasping.

Dataset Structure

The root directory (containing this file) consists of 3 sub-directories.- calibration: Contains information for calibrating the cameras for intrinsic and extrinsic parameters. We recommend using the MATLAB calibration toolbox.

- preview: Montage video preview of 3 cameras and structured light data for all sequences.

- data: All the data resides here.

- SEQUENCE_NAME

- multicam: Data from RGB cameras stored as PNG files in separate directories.

- tof: The ToF data.

- confmap: Confidence map as 16-bit PNG.

- depth: Depth map as 16-bit PNG.

- rgb: Empty.

- uvmap: Emtpy.

- vertices: Point cloud from depth map stored in PCD format (see pointclouds.org). Units are mm.

- structlight: Unsynchronized structured light data in ONI format.

- annotations: Contains manually annotated data and a preview of annotations. See README.txt inside the directory for more details.

- SEQUENCE_NAME

Acknowledgments

We thank Thomas Helten for helping with data capture.