Generative Model-Based Loss to the Rescue: A Method to Overcome Annotation Errors for Depth-Based Hand Pose Estimation

Abstract

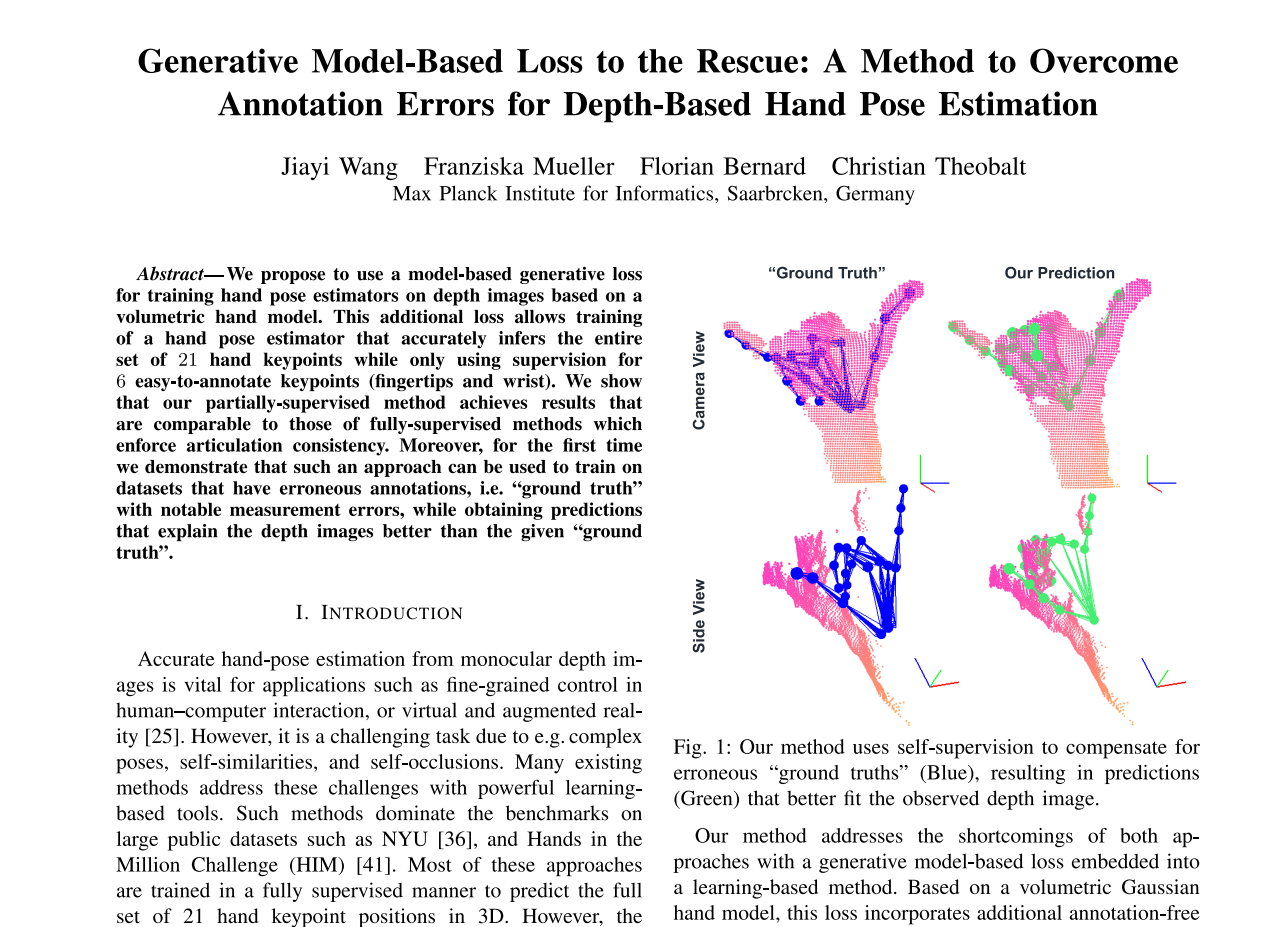

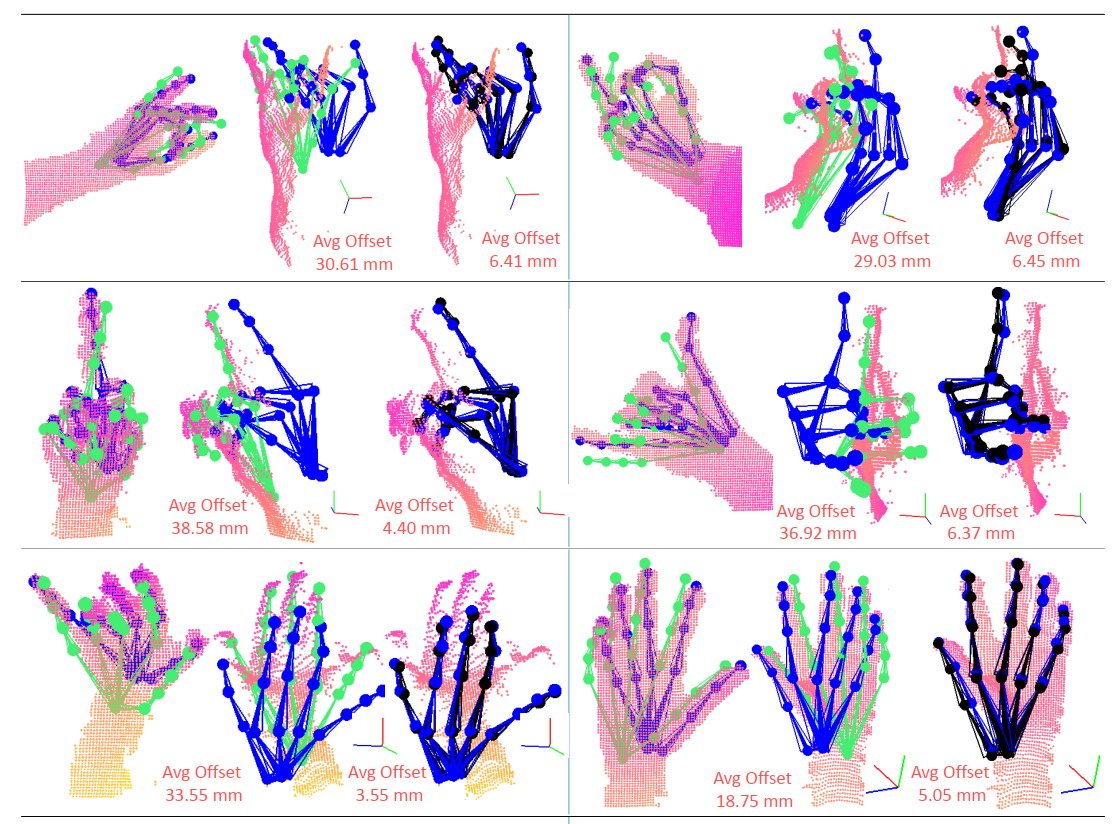

We propose to use a model-based generative loss for training hand pose estimators on depth images based on a volumetric hand model. This additional loss allows training of a hand pose estimator that accurately infers the entire set of 21 hand keypoints while only using supervision for 6 easy-to-annotate keypoints (fingertips and wrist). We show that our partially-supervised method achieves results that are comparable to those of fully-supervised methods which enforce articulation consistency. Moreover, for the first time we demonstrate that such an approach can be used to train on datasets that have erroneous annotations, i.e. "ground truth" with notable measurement errors, while obtaining predictions that explain the depth images better than the given "ground truth".
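As a rough illustration of the training signal described above, the following minimal sketch combines a keypoint loss restricted to the 6 annotated keypoints with a depth-discrepancy term. It is not the authors' implementation. The keypoint ordering (wrist at index 0, fingertips at 4, 8, 12, 16, 20), the function names, the loss forms, and the `weight` parameter are all assumptions made for illustration, and the depth term is a generic stand-in for the volumetric-model-based generative loss, whose renderer is not shown.

```python
import numpy as np

# Assumed 21-keypoint layout: index 0 is the wrist; 4, 8, 12, 16, 20 are fingertips.
# This ordering is a common convention, not something the abstract specifies.
SUPERVISED_IDX = np.array([0, 4, 8, 12, 16, 20])


def partial_keypoint_loss(pred, target):
    """Mean squared distance over the 6 supervised keypoints only.

    pred, target: arrays of shape (21, 3). The other 15 keypoints are ignored.
    """
    diff = pred[SUPERVISED_IDX] - target[SUPERVISED_IDX]
    return float(np.mean(np.sum(diff ** 2, axis=1)))


def generative_depth_loss(rendered_depth, observed_depth):
    """Mean absolute difference between a model-rendered depth map and the input.

    Hypothetical stand-in for the generative term: it only compares two depth maps.
    """
    return float(np.mean(np.abs(rendered_depth - observed_depth)))


def total_loss(pred, target, rendered_depth, observed_depth, weight=1.0):
    """Hypothetical weighted sum of the supervised and generative terms."""
    return (partial_keypoint_loss(pred, target)
            + weight * generative_depth_loss(rendered_depth, observed_depth))
```

In this sketch, errors in the 15 unannotated keypoints contribute nothing to the supervised term, so only the depth term can constrain them, which mirrors the role the abstract assigns to the generative loss.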

Citation

@INPROCEEDINGS{wangFG2020,
  author = {J. Wang and F. Mueller and F. Bernard and C. Theobalt},
  booktitle = {2020 15th IEEE International Conference on Automatic Face and Gesture Recognition (FG 2020)},
  title = {Generative Model-Based Loss to the Rescue: A Method to Overcome Annotation Errors for Depth-Based Hand Pose Estimation},
  year = {2020},
  pages = {93-100},
  keywords = {hand pose estimation;annotation bias;generative loss;depth image},
  doi = {10.1109/FG47880.2020.00013},
  url = {https://doi.ieeecomputersociety.org/10.1109/FG47880.2020.00013},
  publisher = {IEEE Computer Society},
  address = {Los Alamitos, CA, USA},
  month = {may}
}

Acknowledgments

The authors would like to thank all participants of the HandID dataset.

This work was supported by the ERC Consolidator Grant 4DRepLy (770784).
